Images generated by artificial intelligence are becoming more convincing and prevalent, and they could lead to more complicated court cases if the synthetic media is submitted as evidence, legal experts say.

“Deepfakes” often involve editing videos or photos of people to make them look like someone else by using deep-learning AI. The technology broadly hit the public’s radar in 2017 after a Reddit user posted realistic-looking pornography of celebrities to the platform.

The pornography was revealed to be doctored, but the technology has only become more realistic and easier to use in the years since.

For legal experts, deepfakes and other AI-generated images and videos will likely cause massive headaches in the courts, which have been bracing for this technology for years. The ABA Journal, the flagship publication of the American Bar Association, published a post in 2020 warning that courts around the world were struggling with the proliferation of deepfake images and videos submitted as evidence.



“If a picture’s worth a thousand words, a video or audio could be worth a million,” Jason Lichter, a member of the ABA’s E-Discovery and Digital Evidence Committee, told the ABA Journal at the time. “Because of how much weight is given to a video by a factfinder, the risks associated with deepfakes are that much more profound.”

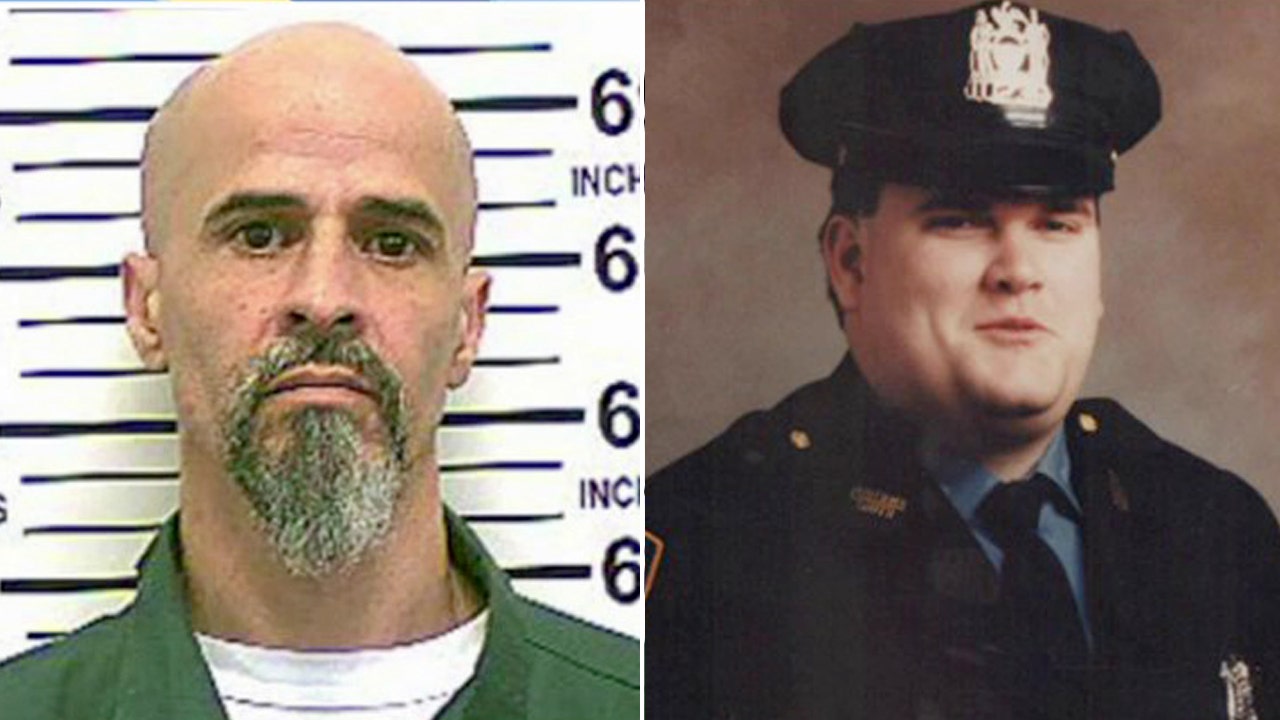

Deepfakes submitted as evidence in court cases have already cropped up. In the U.K., a woman who accused her husband of violence during a custody battle was found to have doctored the audio she submitted as proof.


Two men charged with participating in the Jan. 6, 2021, Capitol riot claimed that video of the incident may have been created by AI. And after the family of a man who died in a Tesla crash sued the company, lawyers for Elon Musk's Tesla recently argued that a 2016 video of Musk, which appeared to show him touting the cars' self-driving features, could be a deepfake.

A professor at Newcastle University in the U.K. argued in an essay last year that deepfakes could become a problem in low-level legal cases working their way through the courts.

“Although political deepfakes grab the headlines, deepfaked evidence in quite low-level legal cases – such as parking appeals, insurance claims or family tussles – might become a problem very quickly,” law professor Lillian Edwards wrote in December. “We are now at the beginning of living in that future.”

Edwards also predicted that deepfakes used to commit crimes will become more prevalent, citing a BBC report on how cybercriminals used deepfake audio in 2019 to swindle three unsuspecting business executives into transferring millions of dollars.


In the U.S. this year, a handful of local police departments have warned about criminals using AI to try to extort ransom money from families. In Arizona, a mother said last month that criminals used AI to mimic her daughter's voice to demand $1 million.

The ABA Journal reported in 2020 that as the technology becomes more powerful and convincing, forensic experts will be saddled with the difficult task of determining what's real. This can entail analyzing photos or videos for inconsistencies, such as the placement of shadows, or authenticating images directly from the camera that captured them.


With the rise of the technology also comes the worry that people will more readily claim that genuine evidence was AI-generated.



“That’s exactly what we were concerned about: That when we entered this age of deepfakes, anybody can deny reality,” Hany Farid, a digital forensics expert and professor at the University of California, Berkeley, told NPR this week.

Earlier this year, thousands of tech experts and leaders signed an open letter calling on AI labs to pause training their most powerful systems so policymakers and industry leaders could craft safety precautions for the technology. The letter argued that powerful AI systems could pose a threat to humanity and warned that they could spread "propaganda."


“Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth?” states the letter, which was signed by tech leaders such as Musk and Apple co-founder Steve Wozniak.