Google CEO Sundar Pichai arrives for a US Senate bipartisan Artificial Intelligence (AI) Insight Forum at the US Capitol in Washington, DC, on September 13, 2023.

Mandel Ngan | AFP | Getty Images

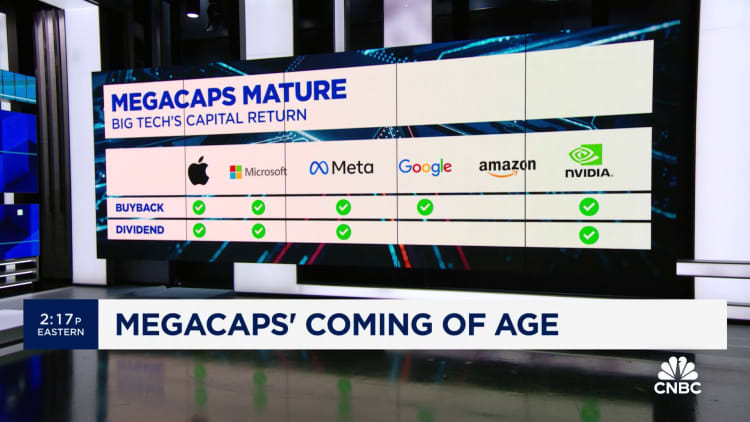

It was a big week for tech earnings, with Microsoft, Meta, Alphabet, Amazon and Apple all reporting over the past few days. Artificial intelligence was on everyone’s lips.

One theme investors heard repeatedly from top execs is that, when it comes to AI, they have to spend money to make money.

“We’ve moved from talking about AI to applying AI at scale,” Microsoft CEO Satya Nadella said on his company’s earnings call on Tuesday. “By infusing AI across every layer of our tech stack, we are winning new customers and helping drive new benefits and productivity gains.”

Last year marked the beginning of the generative AI boom, as companies raced to embed increasingly sophisticated chatbots and assistants across key products. Nvidia was the big moneymaker. Its graphics processing units, or GPUs, are at the heart of the large language models created by OpenAI, Alphabet, Meta and a growing crop of heavily funded startups all battling for a slice of the generative AI pie.

As 2024 gets rolling and executives outline their plans for ongoing investment in AI, they’re more clearly spelling out their strategies to investors. One key priority area, based on the latest earnings calls, is AI models-as-a-service, or large AI models that clients can use and customize according to their needs. Another is investing in AI “agents,” a term often used to describe tools ranging from chatbots to coding assistants and other productivity tools.

Overall, executives drove home the notion that AI is no longer just a toy or a concept for the research labs. It’s here for real.

Cutting costs to make room for AI

At the biggest companies, two huge areas for investment are AI initiatives and the cloud infrastructure needed to support massive workloads. To get there, cost cuts will continue happening in other areas, a message that’s become familiar in recent quarters.

Meta CEO Mark Zuckerberg on Thursday emphasized the company’s continued AI efforts alongside broader cost cuts.

Meta founder and CEO Mark Zuckerberg speaks during the Meta Connect event at Meta headquarters in Menlo Park, California, on September 27, 2023.

Josh Edelson | AFP | Getty Images

“2023 was our ‘year of efficiency’ which focused on making Meta a stronger technology company and improving our business to give us the stability to deliver our ambitious long-term vision for AI and the metaverse,” Zuckerberg said on the earnings call.

Nadella told investors that Microsoft is committed to scaling AI investment and cloud efforts, even if it means looking closely at expenses in other departments, with “disciplined cost management across every team.”

Microsoft CFO Amy Hood underlined the “consistency of repivoting our workforce toward the AI-first work we’re doing without adding material number of people to the workforce,” and said the company will continue to prioritize investing in AI as “the thing that’s going to shape the next decade.”

The theme was similar at Alphabet, where Sundar Pichai spoke of his company’s “focus and discipline” as it prioritizes scaling up AI for Search, YouTube, Google Cloud and beyond. He said investing in infrastructure such as data centers is “key to realizing our big AI ambitions,” adding that the company had cut nonpriority projects and invested in automating certain processes.

“We continue to invest responsibly in our data centers and compute to support this new wave of growth in AI-powered services for us and for our customers,” Pichai said. “You’ve heard me talk about our efforts to durably reengineer our cost base and to improve our velocity and efficiency. That work continues.”

Within Google Cloud, Pichai said the company would cut expenses by reallocating resources to the most important projects, slowing the pace of hiring, improving technical infrastructure and using AI to streamline processes across Alphabet. Capital expenditures, which totaled $11 billion in the fourth quarter, were largely due to investment in infrastructure, servers and data centers, he said.

Ruth Porat, Alphabet’s finance chief, reiterated that the company expects full-year capital expenditures for 2024 to be “notably larger than 2023,” as it continues to invest heavily in AI and the “long-term opportunity” that AI applications inside DeepMind, Cloud and other systems offer.

Amazon CEO Andy Jassy said on this week’s earnings call that generative AI “will ultimately drive tens of billions of dollars of revenue for Amazon over the next several years.”

AI will continue to be a heavy investment area for the company, driving an increase in capital expenditures this year as Amazon pours more money into LLMs, other generative AI projects, and the necessary infrastructure. Jassy emphasized Amazon’s AI chip efforts, naming customers such as Anthropic, Airbnb, Hugging Face, Qualtrics and Snap.

Apple CEO Tim Cook pointed to generative AI as a significant investment area for his company, teasing an announcement later this year.

“As we look ahead, we will continue to invest in these and other technologies that will shape the future,” Cook said during a call with analysts. “That includes artificial intelligence where we continue to spend a tremendous amount of time and effort, and we’re excited to share the details of our ongoing work in that space later this year.”

Cook added, “Let me just say that I think there’s a huge opportunity for Apple with Gen AI and AI, without getting into more details and getting out in front of myself.”

Where the money is flowing

While investors want to see investments in AI by the companies that are key to providing the infrastructure, they also want to see where and how money is being made.

Jassy said enterprise clients are looking to use existing models that they can personalize and build on, pointing to Amazon’s Bedrock as a key focus.

“What we see is that customers want choice,” Jassy said. “They don’t want just one model to rule the world. They want different models for different applications. And they want to experiment with all different-sized models because they yield different cost structures and different latency characteristics.”

Andy Jassy on stage at the 2022 New York Times DealBook in New York City, November 30, 2022.

Thos Robinson | Getty Images

Nadella pointed to Microsoft Azure as a predominant “model as a service” offering, emphasizing that customers don’t have to manage underlying infrastructure yet have access to a range of large and small language models, including some from Cohere, Meta and Mistral, as well as open-source options. One-third of Azure AI’s 53,000 customers joined within the past 12 months, Nadella said.

Alphabet executives highlighted Vertex AI, a Google product that offers more than 130 generative AI models for use by developers and enterprise clients such as Samsung and Shutterstock.

Chatter wasn’t limited to LLMs and chatbots. Many tech execs talked about the importance of AI agents, or AI-powered productivity tools for completing tasks.

Eventually, AI agents could handle tasks such as scheduling a group hangout by scanning everyone’s calendar to make sure there are no conflicts, booking travel and activities, buying presents for loved ones or performing a specific job function such as outbound sales. Currently, though, the tools are largely limited to tasks like summarizing, generating to-do lists or helping write code.

Nadella is bullish on AI agents, pointing to Microsoft’s Copilot assistant as an example of an “evolved” AI application in terms of productivity benefits and a successful business model.

“You are going to start seeing people think of these tools as productivity enhancers,” Nadella said. “I do see this as a new vector for us in what I’ll call the next phase of knowledge work and frontline work, even in their productivity and how we participate.”

Just before Amazon’s earnings hit, the company announced Rufus, a generative AI-powered shopping assistant trained on the company’s product catalog, customer reviews, user Q&A pages and the broader web.

“The question about how we’re thinking about Gen AI in our consumer businesses: We’re building dozens of generative AI applications across the company,” Jassy said on the call. “Every business that we have has multiple generative AI applications that we are building. And they’re all in different stages, many of which have launched and others of which are in development.”

Meta will also be focused, in part, on building a useful AI agent, Zuckerberg said on his company’s call.

“Moving forward, a major goal will be building the most popular and most advanced AI products and services,” Zuckerberg said. “And if we succeed, everyone who uses our services will have a world-class AI assistant to help get things done.”

Alphabet executives touted Google’s Duet AI, or “packaged AI agents” for Google Workspace and Google Cloud, designed to boost productivity and complete simple tasks. Within Google Cloud, Duet AI assists software developers at companies like Wayfair and GE, and cybersecurity analysts at Spotify and Pfizer, Pichai said. He added that Duet AI will soon incorporate Gemini, Alphabet’s LLM that powers its Bard chatbot.

Pichai wants to offer an AI agent that can complete more and more tasks on a user’s behalf, including within Google Search, though he said there is “a lot of execution ahead.”

“We will again use generative AI there, particularly with our most advanced models and Bard,” Pichai said. That “allows us to act more like an agent over time, if I were to think about the future and maybe go beyond answers and follow-through for users even more.”